Augintel

Project Details

Client: Augintel

Team: Executive Team, Engineering, Child Welfare Professionals

Tools: Figma, Miro, Adobe Suite

Tech: React, LLaMA

Ethical AI platform ensuring fair, interpretable insights for child welfare

Context

Augintel develops AI-powered tools that help caseworkers understand years of complex case notes. The mission is profound: help overburdened social-service professionals gain clarity faster, avoid retraumatizing families, and make better-informed decisions.

I joined as the company’s design lead shortly after the departure of its first designer, inheriting a promising product that needed more coherence, empathy, and credibility.

The work took place during a cultural turning point for AI—when public trust was being eroded by stories of algorithmic injustice in criminal sentencing, insurance scoring, and policing. In this climate, design had to do more than polish an interface—it had to earn belief through truthfulness.

My Role

As Design Lead, I acted as both researcher and systems designer:

- Conducted in-depth field interviews with child welfare leaders and front-line workers, including the Allegheny County Department of Human Services

- Established a repeatable user research practice and cross-functional synthesis methods

- Created a cohesive design system to unify a fragmented UI and accelerate development

- Redesigned case summary and “facts of interest” workflows, giving workers clearer entry points into long-running cases

- Collaborated with NLP engineers to surface model blind spots and edge cases, influencing how AI outputs were generated and presented

Design Challenges

Reducing Mistruths, Not Just Misinterpretation

Many charts—sparklines and micro-histograms—were visually appealing but semantically misleading. They could overemphasize volatility or imply causality where there was none. I fine-tuned the visual language to prioritize semantic accuracy over graphic density, adding contextual text and subtle interaction affordances that aimed to help users interpret data responsibly.

Pursuing True Fairness

My personal goal extended beyond usability: to help the team identify bias, surface blind spots, and strive toward fairness—an impossible but necessary pursuit. I introduced review frameworks that helped designers and data scientists analyze how NLP summaries performed across demographic and linguistic boundaries, pushing for transparency and humility in the model’s presentation.

Designing for Emotional Load

Child welfare work is emotionally taxing. I focused on simplifying design elements, using spacing and white space deliberately, and balancing interaction density to create a calmer rhythm and reduce cognitive noise. My goal was to allow workers to focus on nuance, not navigation.

Credit to the wonderful design team that preceded me. My primary contributions to the UI can be found in the product’s search results and tabular narrative data displays.

Impact

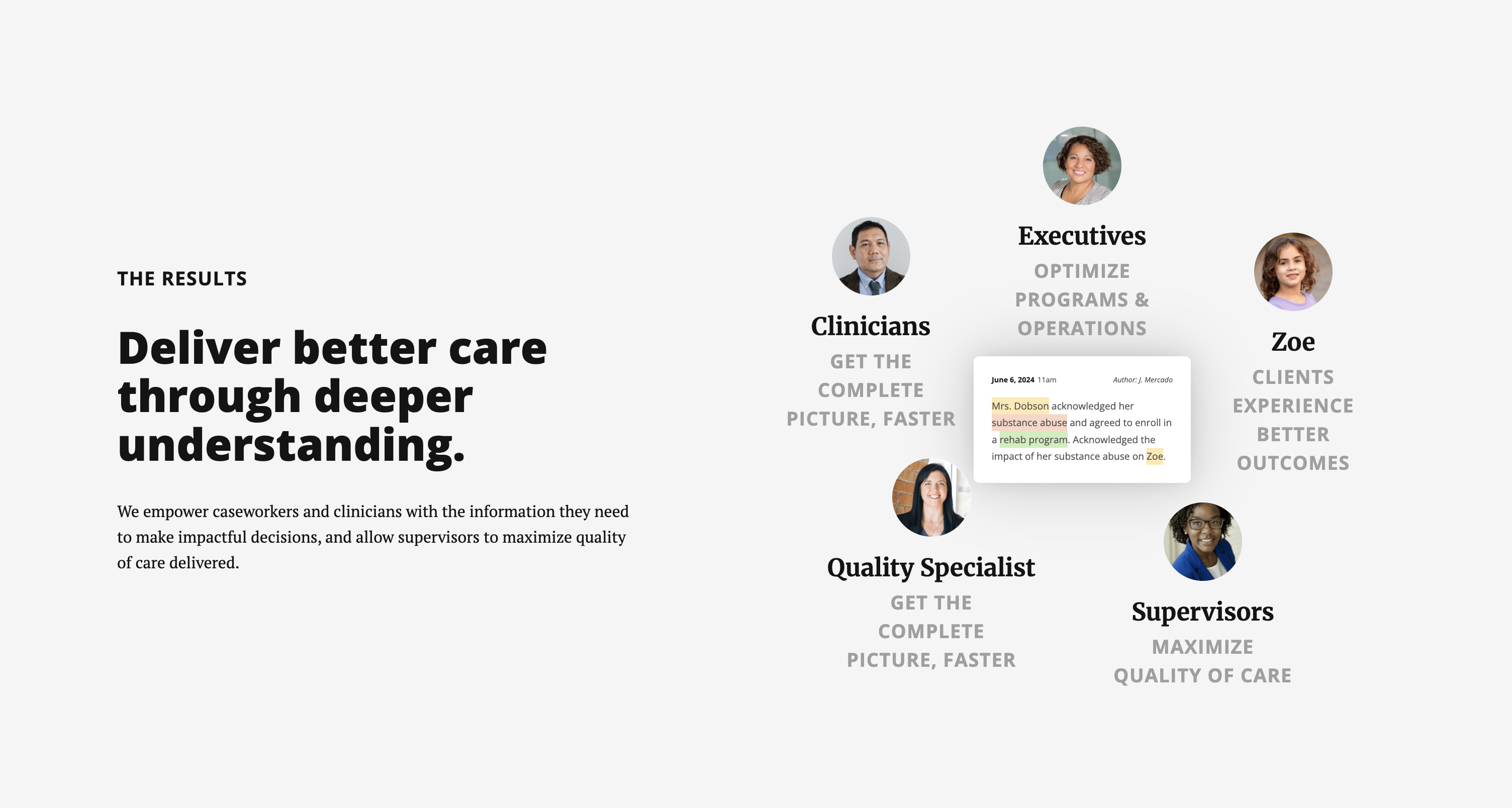

- Caseworkers who use Augintel report saving “weeks of reading” when inheriting complex, years-long cases

- Internally, Augintel adopted a more research-driven approach to iteration, supported by a strong collaborative team. This practice has persisted beyond my tenure

- The company’s commitment to human-centered design and technology development has established its reputation as an ethical voice in AI for social services

Reflection

Looking back, what has stayed with me most is not the sophistication of the models, but the asymmetry of power they introduced. Caseworkers bore responsibility without authorship; families felt the weight of decisions they could not inspect. Design, in this context, became a way of rebalancing: not by claiming neutrality, but by making values visible.

I joined Augintel because I believe fairness is a design problem as much as a data one. My aim was to help the team see that the pursuit of fairness isn’t naïve—it’s necessary, even if unattainable. The project deepened my conviction that design’s role in AI isn’t just communication—it’s conscience.